My NAS is full, and I have been looking to add more storage without breaking the bank; I can't justify buying a second NAS. I also can't really just replace the disks in the existing NAS. While it's technically possible, it requires a rebuild of the array for each drive replaced, and the extra capacity can only be used in a new volume or once all 12 drives have been replaced. In short, it's a bit risky.

Enter Proxmox and relatively affordable HBA (Host Bus Adapter) cards.

Since I already have a Proxmox cluster set up, it would be ideal to take advantage of some of that hardware, and it turns out that with the addition of an HBA that's entirely possible. We need an HBA because we don't want Proxmox to manage the new disks for the NAS VM. It is important that whatever NAS system is chosen has direct access to the underlying hardware rather than just access to a virtual block device. By default Proxmox only provides virtualized disks to guest operating systems, so to get device-level access we need to pass the entire controller through to the guest.

We can't pass through the onboard controller because Proxmox needs it for its own storage, so this is where the HBA comes in: it's a discrete piece of hardware that the host Proxmox system doesn't rely on. I picked up an LSI SAS 9212-4i4e for a bit of a bargain on the local marketplace from someone who had used it for a similar project. This means it was already in IT mode, which I have read is a requirement for things to work smoothly, though I'm unsure how to change it myself.

I also picked up the biggest investment for this project, a 20TB Western Digital Ultrastar DC HC560. It's just the one for now, for prototyping and for storing easily replaceable or otherwise backed-up data, but with capacity to add more when needed.

Setting Up Proxmox

There are a few tweaks that need to be made to Proxmox (well, specifically the underlying Debian) to make PCIe passthrough work properly. The instructions for AMD and Intel based systems are different; I'm using an older Intel based system for this with the following specifications:

- CPU: Intel Core i7 6700k

- Motherboard: ASUS Z170 Deluxe

- Memory: 32GB DDR4 memory

- Storage: 250GB Samsung EVO 850 (OS) & 500GB Samsung EVO 860 (VMs)

- HBA: LSI SAS 9212-4i4e

On some boards it looks like IOMMU options are separate, but on my ASUS they're all included under the VT-d virtualization options that need to be enabled for Proxmox to work in the first place, so no BIOS changes were needed.

This website (in French) was a useful resource for some of the common ASUS and MSI BIOS configurations.

All of the changes needed are within the operating system, as follows.

Grub config

Edit /etc/default/grub and update the line:

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt"

Save, and run update-grub
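If you would rather script the edit than open the file by hand, something like the following could work. It is only a sketch: the function name `add_iommu_flags` is my own, and it assumes the `GRUB_CMDLINE_LINUX_DEFAULT` line is already present and double-quoted, and that GNU `sed` is available, as it is on Debian.

```shell
# Sketch: append the IOMMU flags to GRUB_CMDLINE_LINUX_DEFAULT if they are missing.
# add_iommu_flags is a hypothetical helper name, not something Proxmox ships.
add_iommu_flags() {
    file="$1"
    for flag in intel_iommu=on iommu=pt; do
        # Skip flags already present on the line, so re-running changes nothing.
        if ! grep -q "^GRUB_CMDLINE_LINUX_DEFAULT=.*[\" ]$flag[\" ]" "$file"; then
            # Insert the flag just before the closing quote of the value.
            sed -i "s/^\(GRUB_CMDLINE_LINUX_DEFAULT=\".*\)\"/\1 $flag\"/" "$file"
        fi
    done
}
```

On the host this would be called as `add_iommu_flags /etc/default/grub`, followed by `update-grub`. Taking a copy of the file first is cheap insurance.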

Enable the VFIO kernel modules

Edit /etc/modules and add the following lines to the bottom of the file:

vfio
vfio_iommu_type1
vfio_pci
vfio_virqfd

Save changes and run update-initramfs -u
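The same step can be scripted so that it is safe to run more than once. This is a minimal sketch with a helper name of my own choosing (`add_vfio_modules`). As far as I understand it, newer kernels (6.2 and later) fold `vfio_virqfd` into `vfio`, so that line may be unnecessary there; I have kept it to match the list above.

```shell
# Sketch: add the VFIO modules to a modules file, skipping any already listed.
# add_vfio_modules is a hypothetical helper name.
add_vfio_modules() {
    file="$1"
    for mod in vfio vfio_iommu_type1 vfio_pci vfio_virqfd; do
        # -x matches whole lines only, so "vfio" does not match "vfio_pci".
        grep -qx "$mod" "$file" || echo "$mod" >> "$file"
    done
}
```

On the host this would be `add_vfio_modules /etc/modules`, followed by `update-initramfs -u`.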

Reboot

The system should reboot normally and appear to be working as it was before.
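Since nothing looks different after the reboot, it can be worth confirming that the IOMMU is actually active before moving on. One way, sketched below under the assumption that the kernel exposes groups under `/sys/kernel/iommu_groups`, is to list which PCI devices landed in which group. The function name is mine.

```shell
# Sketch: print "group <n>: <pci-address>" for every device in every IOMMU group.
# The optional argument overrides the sysfs path, which is mainly handy for testing.
list_iommu_groups() {
    root="${1:-/sys/kernel/iommu_groups}"
    for dev in "$root"/*/devices/*; do
        # If the glob matched nothing, the IOMMU is probably not enabled.
        [ -e "$dev" ] || continue
        group="${dev#"$root"/}"   # strip the leading path
        group="${group%%/*}"      # keep only the group number
        printf 'group %s: %s\n' "$group" "${dev##*/}"
    done
}
```

If `list_iommu_groups` prints nothing, the kernel parameters most likely did not take effect. `lsmod | grep vfio` is a similar quick check for the modules, and `dmesg | grep -e DMAR -e IOMMU` should show the IOMMU being enabled at boot.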

Set up the guest VM (TrueNAS Scale)

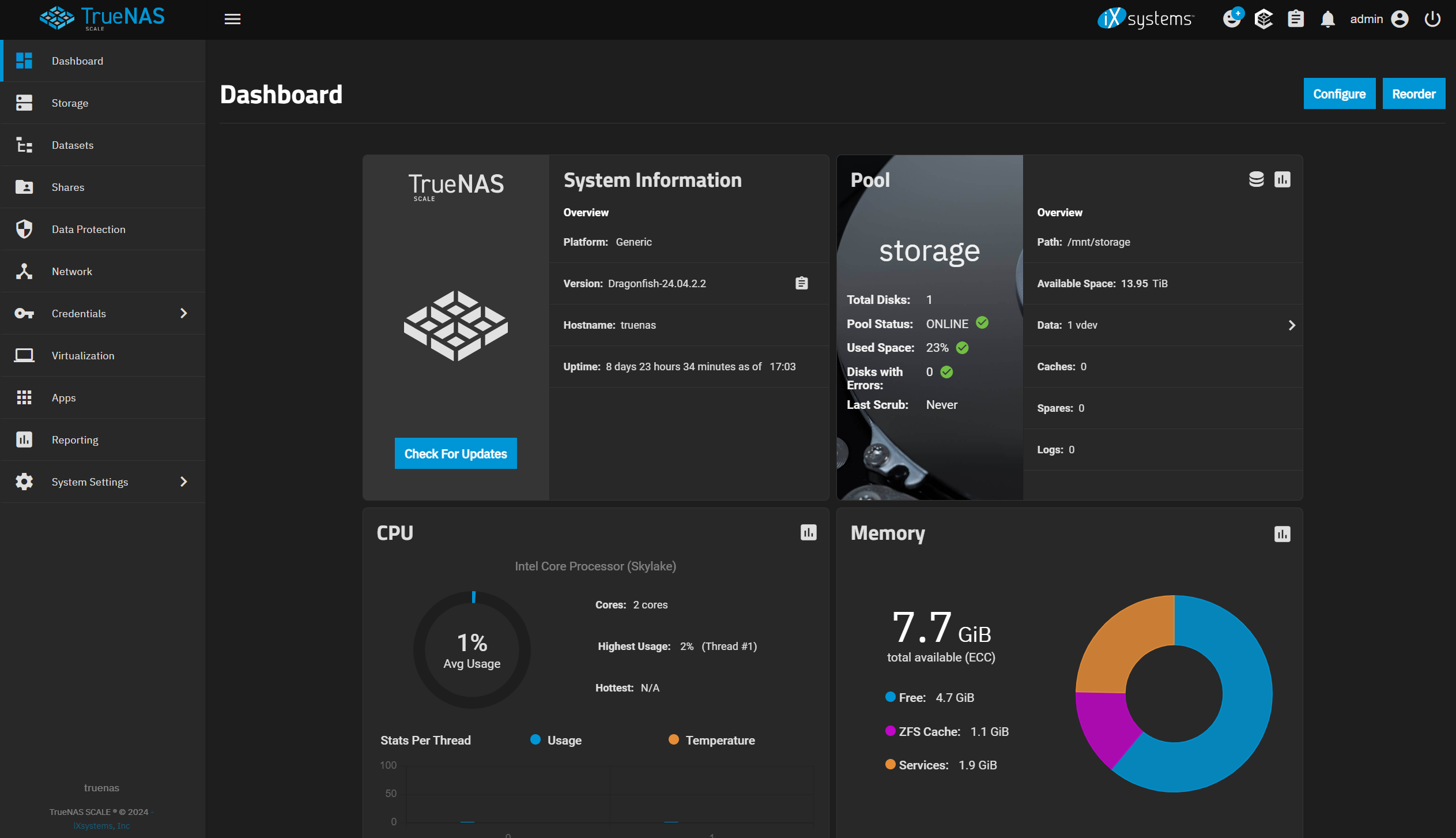

For the system running the NAS, I decided to try out the ever-popular TrueNAS Scale (I chose Scale over Core because it's based on Debian, which I am familiar with, and it seems to be getting a lot of the newer features).

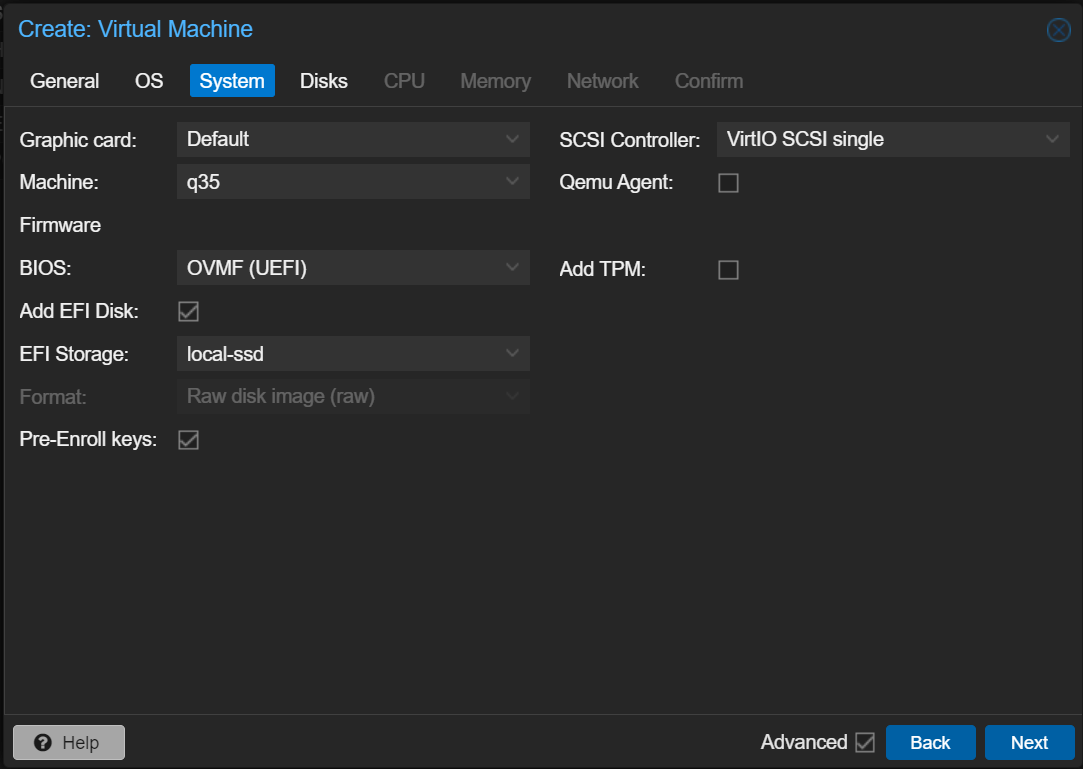

Setting up the guest machine in Proxmox was mostly follow-your-nose as it normally is, with a few key changes. In the System setup the following settings need to be changed from their defaults:

- Machine: Change to q35

- BIOS: Change to OVMF (UEFI)
  - Change EFI Storage to your regular VM storage (local-ssd in my case)

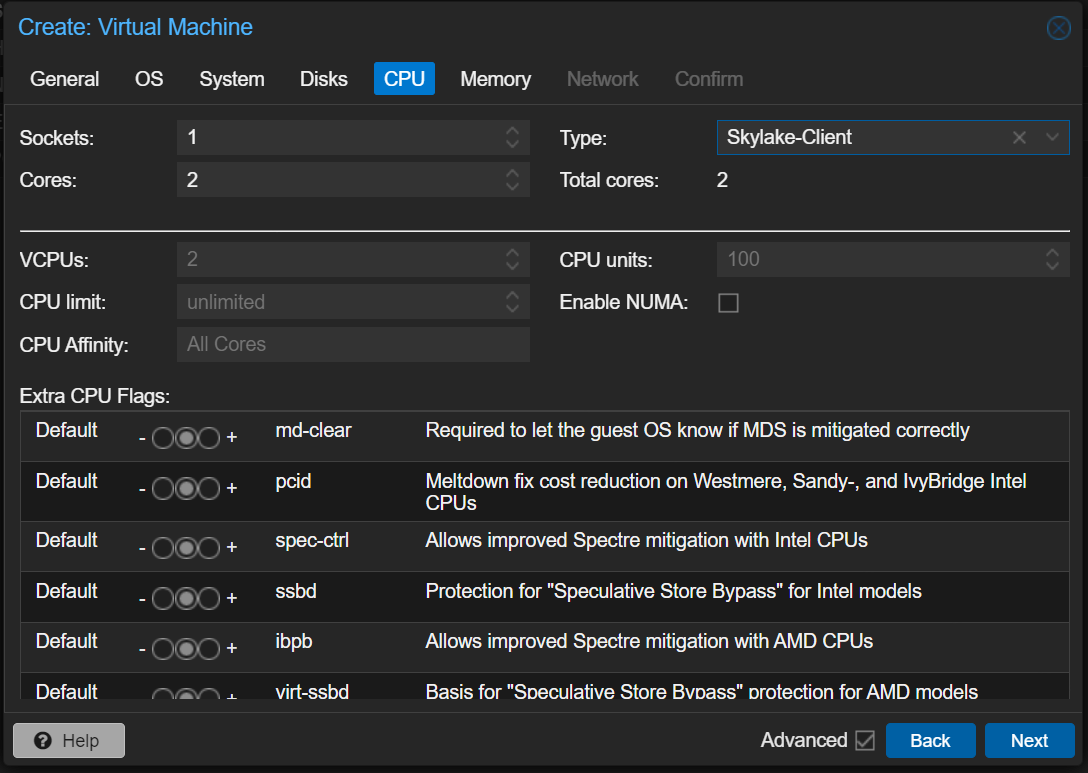

Then under CPU we need to make another change and set the CPU Type, which I set to Skylake-Client as my host CPU is a Skylake series CPU.

For memory I gave it 8GB of RAM, and network was similarly standard and just placed on my storage network.
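For anyone who prefers the command line, the settings above map roughly onto a single `qm create` call. The sketch below only prints the command so it can be reviewed first. The VM ID, the name `truenas` and the bridge `vmbr0` are placeholders I made up, and the OS disk and installer ISO are left out. `pre-enrolled-keys=0` is my own addition: as I understand it, this creates the EFI disk without Secure Boot keys enrolled.

```shell
# Sketch: print (not run) a qm create command matching the settings described above.
# "truenas" and vmbr0 are placeholder values, not taken from my actual setup.
print_qm_create() {
    vmid="$1"      # e.g. 200 (hypothetical VM ID)
    storage="$2"   # e.g. local-ssd
    printf '%s\n' "qm create $vmid --name truenas \
--machine q35 --bios ovmf \
--efidisk0 $storage:1,efitype=4m,pre-enrolled-keys=0 \
--cpu Skylake-Client --memory 8192 \
--net0 virtio,bridge=vmbr0"
}
```

Calling `print_qm_create 200 local-ssd` prints the full command on one line, which can then be adjusted and pasted into a shell on the Proxmox host.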

Adding the HBA

Now that the basic VM is set up, we can add the HBA card to the VM, instructing Proxmox to pass it directly through. In the Hardware options of the VM, add a PCI Device, selecting the HBA from the dropdown:

![Proxmox dialog for adding a PCI device. The main dialog is obscured by the Device dropdown menu, with the Broadcom/LSI SAS2008 PCI-Express Fusion-MPT SAS-2 [Falcon] option highlighted](https://static.aramsay.co.nz/content/2024/09/image-2.png)

You need to ensure that both All functions and PCI-Express are checked as well.
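The same thing can be done from the host shell with `qm set`. The two helpers below are a sketch with names of my own invention. They assume the card shows up in `lspci -D` with "SAS2008" in its description, as it does in the dropdown above. Dropping the function suffix (the `.0`) from the address is, as far as I can tell, the command-line equivalent of All functions, and `pcie=1` corresponds to the PCI-Express checkbox.

```shell
# Sketch: read `lspci -D` output on stdin and print the address of the first SAS2008 device.
find_hba_address() {
    awk '/SAS2008/ { print $1; exit }'
}

# Sketch: turn a full PCI address such as 0000:01:00.0 into a hostpci value.
# Removing the ".0" function suffix passes through all functions of the device.
hostpci_value() {
    printf '%s,pcie=1\n' "${1%.*}"
}
```

With a hypothetical VM ID of 200, this would be used as `qm set 200 --hostpci0 "$(hostpci_value "$(lspci -D | find_hba_address)")"`.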

Booting the VM

This is where I had issues: the VM simply wouldn't load the installer and dumped me into a UEFI recovery console. This was because by default the OVMF BIOS enables Secure Boot to support Windows guest machines. This needs to be disabled for TrueNAS (or other Linux distros) to boot.

To disable Secure Boot, open the Console, start the VM, and mash Escape when prompted to enter the VM BIOS. From here you should be able to disable Secure Boot, save changes and reboot.

Installing TrueNAS

Once booted into the installer, follow the steps to get TrueNAS installed. The main step to pay attention to is the drive target. TrueNAS can be installed across multiple disks (in a mirror configuration), so you will want to make sure you select the virtualized VM disk here and not the 20TB disk it should see attached to the HBA. That disk will be used to create a Storage Pool and VDEV later on.

I'm not going to go into detail about how to set up TrueNAS, as there are plenty of other resources around for that, and I wouldn't consider myself any kind of expert on the topic as this is the first time I have used it. All going well, you should end up in the web interface where you can configure a storage pool 🎉